ALEXA TRANSLATIONS 5.2 IS HERE: UNLOCK POWER, SPEED, AND CONTROL

We're thrilled to announce the launch of Alexa Translations 5.2! This update transforms our platform, giving you greater control over your dedicated translation environment, streamlining your entire workflow, and delivering unmatched security and speed.

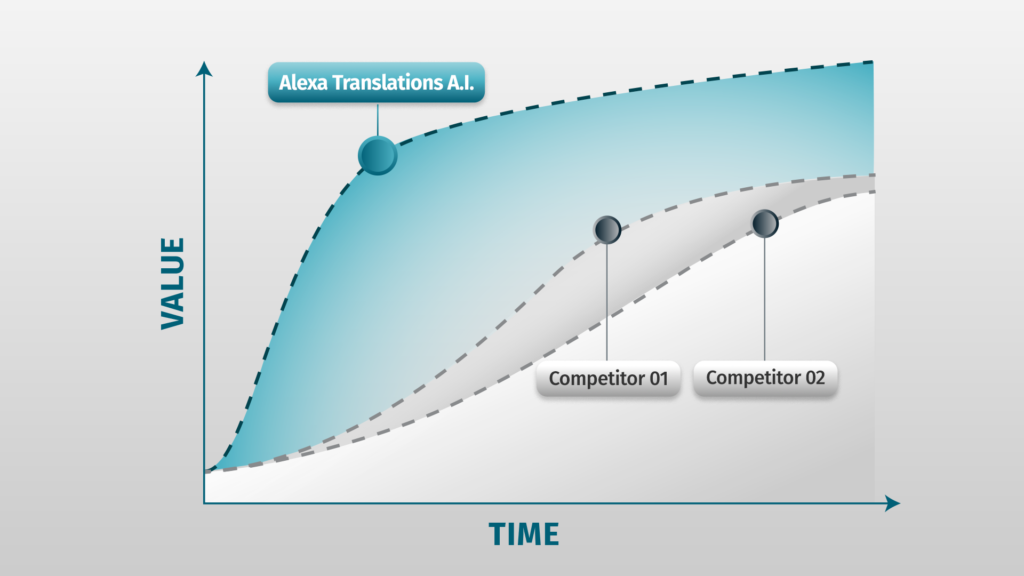

“The latest iteration of Alexa Translations A.I. raises the bar for the translation technology space. As market leaders, the impetus behind improving our technology is clear: make the product so intuitive it feels like a member of your team. 5.2 makes it easier than ever to deliver flawless, industry-specific translations without compromise.”

— Gary Kalaci (CEO, Alexa Translations)

Here's how 5.2 makes your life easier and your translations better:

Our Adaptive engines learn from edits in real time, and now you can kickstart that learning! You can pre-train your Adaptive engines by uploading previous translations and existing Translation Memories. This instantly turns your past work into reference files, reducing manual fixes later.

See the Power of Pre-Trained Adaptive Learning:

When one client used Adaptive engines, they saw the following after making edits to just five documents:

By pre-training your Adaptive engines, you can accelerate this learning and see results faster. This frees your team to focus on high-value work, like maintaining brand consistency and handling nuanced content, instead of correcting terminology.

“Our advanced Adaptive Translation is about to be supercharged with our new Pre-Training ability. Adaptive Translation has been a market leader since its launch earlier this year. It enables the translation engine to adapt to manual, human corrections in real time. Now with pre-training, the Adaptive engine learns from previous translations so it can produce accurate, contextual translations from day one, shortening the time-to-value for our customers.”

— Miki Velemirovich (Head of Product, Alexa Translations)

Our Automatic Formatting Restorer now uses advanced A.I. (LLMs) instead of rigid code. Beyond getting terminology and nuance exactly right, the system preserves fonts and styles, maintains complex layouts like tables, columns, and page breaks, and intelligently fixes broken or overlapping tags that can corrupt formatting. The platform also learns your formatting preferences, so translations are correctly formatted from the start.

“Under the hood of 5.2, we have a Large Language Model (LLM) powering our automatic formatting feature. That means clients benefit from the most powerful A.I. solution to preserve preferences and fix errors from the original document.”

— Giovanni Iacovino (VP of Technology, Alexa Translations)

We've significantly upgraded our translation models, focusing on better output quality and meeting the strictest data security requirements.

PII-Safe Translation and Data Residency

To meet your security needs, both the INFINITE and NEURAL models ensure Personally Identifiable Information (PII) is processed only within our systems and never by third-party LLMs. For clients requiring additional regulatory assurance, the NEURAL model also offers full data residency with Canadian-based hosting.

Faster, Smarter NEURAL Model

We've improved the NEURAL model infrastructure to deliver tangible benefits:

Version 5.2 introduces features designed to make your translation process faster and more intuitive.

Scale Translations with “Retranslate”:

Forgot a key reference document? Use “Edit Settings & Retry” to fix settings without re-uploading the file. Need the same file in a different language? Use “Retranslate” to apply new settings instantly, with no re-uploading needed!

Instant Document Insights:

Get an AI-generated document summary alongside a clear overview of all TMs, Glossaries, and settings applied to a translation. You no longer need to dig to confirm your configurations, which saves you time on every project.

New Names for a Simpler Experience

We've updated our terminology to align with industry standards and simplify your experience:

Control and Customization

The 5.2 launch marks a major milestone in providing a highly configurable, secure, and accurate AI translation platform for your most sensitive and complex projects.